The diagram above illustrates hashing: we take the text “abc123”, apply a hash function (SHA-1), and get a fixed-size alphanumeric output called a hash value. The original input text cannot be recovered from this hash value.

Fundamentals of Hashing

Hash functions are one-way (irreversible): we cannot reverse a hash value to find the original content.

If we pass the same content through the same hash function, it always produces the same hash value.
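The two properties above can be checked with a minimal sketch using Python's standard `hashlib` module. The helper name `sha1_hex` is only an illustrative choice, and SHA-1 is used because it is the function named in the introduction.

```python
import hashlib

def sha1_hex(text: str) -> str:
    """Return the SHA-1 digest of `text` as a hexadecimal string."""
    return hashlib.sha1(text.encode("utf-8")).hexdigest()

first = sha1_hex("abc123")
second = sha1_hex("abc123")
other = sha1_hex("abc124")

print(first == second)  # same input, same hash value
print(first == other)   # a one-character change gives a different hash value
print(len(first))       # SHA-1 output is always 160 bits, i.e. 40 hex characters
```

Note that `hashlib` offers no function for turning `first` back into “abc123”; the only way to find the input is to guess candidates and hash them.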

Imagine a scenario of storing passwords in software systems…

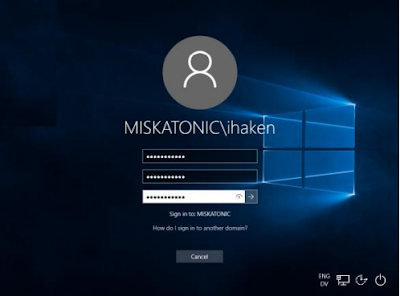

If we store passwords in plain text, anyone who has access to the database can view all the passwords and can even log in to the system using someone else’s credentials. To overcome this, we can use hashing.

Instead of saving the plain text password, we can hash the password using a hash function (h1) and store the hash value.

In the table above, john and sam have the same password, “abc123”, so after applying the hash function both of them get the same hash value. Imagine john has access to the database and can view the hashed passwords. He will notice that his password hash and sam’s password hash are identical, which tells him that sam uses the same password as he does. So john will be able to log in to the system using sam’s credentials. To overcome this, we can apply a technique called salting.
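The problem can be reproduced with a short sketch. The function `h1` below is a hypothetical stand-in for the hash function in the text (SHA-256 is assumed here), and the `users` dictionary is an illustrative substitute for the database table.

```python
import hashlib

def h1(password: str) -> str:
    # Hypothetical stand-in for the hash function h1 in the text (SHA-256 assumed).
    return hashlib.sha256(password.encode("utf-8")).hexdigest()

# Illustrative user table: username -> stored hash (no plain-text passwords).
users = {
    "john": h1("abc123"),
    "sam": h1("abc123"),
}

# Identical passwords yield identical stored values, which reveals the match.
same_hash = users["john"] == users["sam"]
print(same_hash)
```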

Salted Hashing

In salted hashing, our goal is to make the hash value of each password unique. To do that, the system generates a random set of characters called a salt. When a user enters a plain text password, the generated salt is appended to it. The combined text is then sent to the hash function to get the hash value (the salted hash). In this case, we have to store the salt value for each user.

In the table above, even though john and sam have the same password, their hash values are different.

During login, the system gets the salt value for the relevant user from the database, appends it to the input password, passes the result through the hash function, and compares the resulting hash value with the hash value stored in the table. If both hash values match, the user is authenticated.
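The registration and login steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not a production design: the names `register`, `login`, `hash_with_salt` and the in-memory `db` dictionary are hypothetical, and SHA-256 is assumed as the hash function.

```python
import hashlib
import hmac
import secrets

def hash_with_salt(password: str, salt: str) -> str:
    # Append the salt to the password, then hash (SHA-256 is an assumption).
    return hashlib.sha256((password + salt).encode("utf-8")).hexdigest()

def register(db: dict, username: str, password: str) -> None:
    salt = secrets.token_hex(16)  # random per-user salt: 16 bytes as hex text
    db[username] = {"salt": salt, "hash": hash_with_salt(password, salt)}

def login(db: dict, username: str, password: str) -> bool:
    record = db.get(username)
    if record is None:
        return False
    candidate = hash_with_salt(password, record["salt"])
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(candidate, record["hash"])

db = {}  # illustrative stand-in for the users table
register(db, "john", "abc123")
register(db, "sam", "abc123")

print(db["john"]["hash"] == db["sam"]["hash"])  # same password, different salts
print(login(db, "john", "abc123"))
print(login(db, "john", "wrong-password"))
```

A single fast hash is used here only to mirror the text. Real systems usually prefer a deliberately slow function such as `hashlib.pbkdf2_hmac` or `hashlib.scrypt`, which makes guessing passwords more expensive.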

Hash Collision

When two different inputs have the same hash value, it is called a collision. Since a hash function accepts inputs of arbitrary length but produces output of a fixed, predefined length, there are more possible inputs than possible outputs, so some different inputs must produce the same hash value.
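To make this tangible, the sketch below shrinks the output space on purpose. The function `tiny_hash` is a hypothetical toy that keeps only the first 16 bits of SHA-256, so there are just 65,536 possible outputs and a collision is guaranteed to appear within 65,537 distinct inputs. Full-length hashes behave the same way in principle, only with an output space far too large to search like this.

```python
import hashlib
from typing import Tuple

def tiny_hash(text: str) -> str:
    # Toy hash: keep only the first 4 hex characters (16 bits) of SHA-256.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:4]

def find_collision() -> Tuple[str, str]:
    seen = {}  # tiny hash value -> first input that produced it
    counter = 0
    while True:
        candidate = f"input-{counter}"
        digest = tiny_hash(candidate)
        if digest in seen:
            # Two different inputs with the same (truncated) hash value.
            return seen[digest], candidate
        seen[digest] = candidate
        counter += 1

a, b = find_collision()
print(a, b, tiny_hash(a), tiny_hash(b))
```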

Here is an example that displays different content, yet has the same SHA-1 value. A team of researchers from CWI (Centrum Wiskunde & Informatica) and Google have managed to alter a PDF without changing its SHA-1 hash value.

http://shattered.io/

SHA-512 produces 512-bit hashes, much longer than the 128-bit hashes produced by MD5, so collisions are far harder to find.

See the difference for yourself:

Input text: “password”

MD5: 5f4dcc3b5aa765d61d8327deb882cf99

SHA-1: 5baa61e4c9b93f3f0682250b6cf8331b7ee68fd8

SHA-256: 5e884898da28047151d0e56f8dc6292773603d0d6aabbdd62a11ef721d1542d8

SHA-512: b109f3bbbc244eb82441917ed06d618b9008dd09b3befd1b5e07394c706a8bb980b1d7785e5976ec049b46df5f1326af5a2ea6d103fd07c95385ffab0cacbc86
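The values above can be reproduced with Python's standard `hashlib` module. This is a small sketch; the variable names are illustrative.

```python
import hashlib

text = "password"

# Compute the digest of the same input with each algorithm.
digests = {
    name: hashlib.new(name, text.encode("utf-8")).hexdigest()
    for name in ("md5", "sha1", "sha256", "sha512")
}

for name, value in digests.items():
    # Each hex character encodes 4 bits of the digest.
    print(f"{name}: {len(value) * 4} bits  {value}")
```

The printed lengths (128, 160, 256 and 512 bits) show how the output size grows from MD5 to SHA-512.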

Applications of Hashing

For password storage and authentication.

We have discussed this scenario above.

Integrity Protection.

Bob wants to send a message to Alice, but there is a man in the middle, Darth, who can modify the message on its way from Bob to Alice. So how can Alice verify that she received the original message sent by Bob, and that it was not modified by someone during the communication?

After writing the message, Bob can calculate its hash value and send it along with the message. When Alice receives the message, she calculates its hash value again using the same hash function Bob used and compares it with the hash value she received from Bob. If both hash values are equal, Alice can conclude that the message was not modified. This works only if Darth cannot also replace the hash value, so in practice the hash is delivered over a trusted channel or protected with a secret key (HMAC) or a digital signature.
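The exchange between Bob and Alice can be sketched as follows. The names `digest` and `is_unmodified` and the sample messages are illustrative, SHA-256 is an assumed choice of hash function, and the sketch assumes the hash value itself reaches Alice unaltered.

```python
import hashlib
import hmac

def digest(message: bytes) -> str:
    # Both sides must use the same hash function (SHA-256 assumed here).
    return hashlib.sha256(message).hexdigest()

# Bob's side: compute the hash and send it along with the message.
message = b"Meet me at noon"
sent_hash = digest(message)

# Alice's side: recompute the hash and compare it with the one received.
def is_unmodified(received_message: bytes, received_hash: str) -> bool:
    return hmac.compare_digest(digest(received_message), received_hash)

print(is_unmodified(message, sent_hash))             # message arrived intact
print(is_unmodified(b"Meet me at nine", sent_hash))  # message altered in transit
```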

SSL Certificate Validation

HTTPS is shown in the browser’s URL bar to indicate a secure connection when accessing secure websites. During the SSL/TLS handshake, after the client says hello, the server sends its public key in a certificate that asserts the public key belongs to the server. For a website (google.com), the certificate contains the domain name of the website. Basically, the certificate says something like “this public key (sent with the certificate) belongs to google.com”. So how do you check the validity of this certificate? That’s where hashing comes into play.

Here is the certificate of google.com. You can see the hash value of the google.com certificate under the Fingerprints section.